Proposal Summary

This proposal, if passed, will require the ssv.network DAO Multi-Sig Committee (hereinafter: “MC”) to batch and execute the transactions needed to update the relevant smart contracts so that operators on the ssv.network can run up to 3000 validators per operator, up from the current limit of 1000.

Motivation

Since DIP-29, operators have been limited to 1000 validators per operator. The DAO’s research at the time showed that a higher limit would cause unreasonable resource usage on operator machines and make the ssv.network less efficient as a whole. However, both the professional and solo operator communities have long requested a higher limit.

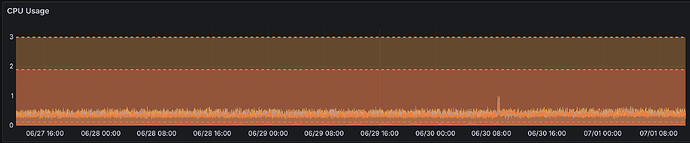

Therefore, since December 2023, and subsequently since DIP-29, the DAO has understood that this limit needed to be raised. With this in mind, the DAO has worked to optimize resource usage and increase the cap in order to reduce operational overhead for operators and meet the ssv.network community’s needs. This was achieved with the Alan fork, which went live at the end of 2024. Now, with sufficient statistical data, the cap can be raised from 1000 to 3000 validators per operator while maintaining the existing computational resources used. This effectively reduces operator overhead.

This reduction in overhead will result in more streamlined operations for operators, lower costs, and potentially improved performance across the board.

Proposal particulars

- Execution Parameters

Execution Parameters

The proposed change enables each operator to manage up to 3000 validators.

To perform this change, the MC will invoke a specific function (already implemented in the SSV Network contract) with the parameter 3000 in a single transaction. This function can be called only by the owner of the contract, which is the MC.
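For readers less familiar with owner-gated contract settings, the mechanism described above can be illustrated with a minimal Python sketch. This is a toy model only, not the SSV Network contract: the class name, the function name `set_validators_per_operator_limit`, and the `MC` identifier are hypothetical, since the proposal does not name the actual function or addresses.

```python
class OperatorLimitModel:
    """Toy stand-in for an owner-gated contract setting (not the real contract)."""

    def __init__(self, owner: str, validators_per_operator_limit: int = 1000):
        self.owner = owner
        self.validators_per_operator_limit = validators_per_operator_limit

    def set_validators_per_operator_limit(self, caller: str, new_limit: int) -> None:
        # Hypothetical function name; the proposal does not name the real one.
        if caller != self.owner:
            raise PermissionError("caller is not the owner")
        if new_limit <= 0:
            raise ValueError("limit must be positive")
        self.validators_per_operator_limit = new_limit


MC = "multi-sig-committee"  # placeholder identifier, not a real address
contract = OperatorLimitModel(owner=MC)

# A call from anyone other than the owner is rejected and changes nothing.
try:
    contract.set_validators_per_operator_limit("some-operator", 3000)
except PermissionError:
    pass

# The owner (the MC in this model) raises the limit in a single call.
contract.set_validators_per_operator_limit(MC, 3000)
```

In this model the limit stays at 1000 after the rejected call and becomes 3000 only after the owner's call, mirroring the single owner-only transaction the proposal describes.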